Regularization Machine Learning Mastery

In this post, let's go over some widely used regularization techniques and the key differences between them. Activity (or representation) regularization provides a technique to encourage the learned representations, that is, the output or activation of the hidden layer or layers of the network, to stay small and sparse.
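To make activity regularization concrete, here is a minimal sketch in plain Python. It assumes an L1 penalty on the ReLU outputs of a single hidden layer, and the function names and the coefficient `lam` are illustrative, not from any library. In Keras, the same idea is exposed through a layer's `activity_regularizer` argument.

```python
def relu(x):
    return max(0.0, x)

def hidden_layer(inputs, weights, biases):
    # One dense layer with ReLU; each row of `weights` feeds one hidden unit.
    return [relu(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def l1_activity_penalty(activations, lam):
    # Penalize the layer's outputs (activations), not its weights.
    return lam * sum(abs(a) for a in activations)

def regularized_loss(data_loss, activations, lam):
    # Total objective = task loss + activity penalty. Large or dense
    # activations raise the objective, nudging them toward small and sparse.
    return data_loss + l1_activity_penalty(activations, lam)

x = [1.0, -2.0]
W = [[0.5, 0.25], [1.0, -0.5], [-2.0, 1.0]]
b = [0.0, 0.5, 0.0]
h = hidden_layer(x, W, b)  # activations of the hidden layer
print(h, regularized_loss(0.3, h, lam=0.1))
```

Because the penalty is computed on `h` rather than on `W`, it targets the learned representation directly, which is what distinguishes activity regularization from weight regularization.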


Ensembles of neural networks with different model configurations are known to reduce overfitting but require the additional computational expense of training and maintaining multiple models.

What Is Regularization?

Machine learning models must make predictions on unknown data, that is, data the model has not seen yet.

This is where regularization steps in: it makes slight changes to the learning algorithm so that the model generalizes better. Regularization tunes the function by adding a penalty term to the error function. It is the most widely used technique for penalizing complex models in machine learning, and it is deployed to reduce overfitting, that is, to shrink the generalization error, by keeping the network weights small.
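As an illustration, assume a linear model, mean squared error as the error function, and a squared-weight (L2) penalty scaled by a hypothetical coefficient `lam`. The penalized error function can then be sketched as:

```python
def predict(weights, bias, xs):
    # Linear model: dot(weights, row) + bias for each input row.
    return [sum(w * x for w, x in zip(weights, row)) + bias for row in xs]

def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def penalized_error(weights, bias, xs, ys, lam):
    # Error function = data-fit term + lam * (sum of squared weights).
    # The bias is left out of the penalty, as is conventional.
    data_term = mse(ys, predict(weights, bias, xs))
    penalty = lam * sum(w * w for w in weights)
    return data_term + penalty

xs = [[1.0], [2.0], [3.0]]
ys = [2.0, 4.0, 6.0]
print(penalized_error([2.0], 0.0, xs, ys, lam=0.0))  # pure data fit
print(penalized_error([2.0], 0.0, xs, ys, lam=0.1))  # fit + weight penalty
```

With `lam = 0` this is the ordinary error; any positive `lam` makes large weights cost more, so the optimizer prefers smaller ones.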

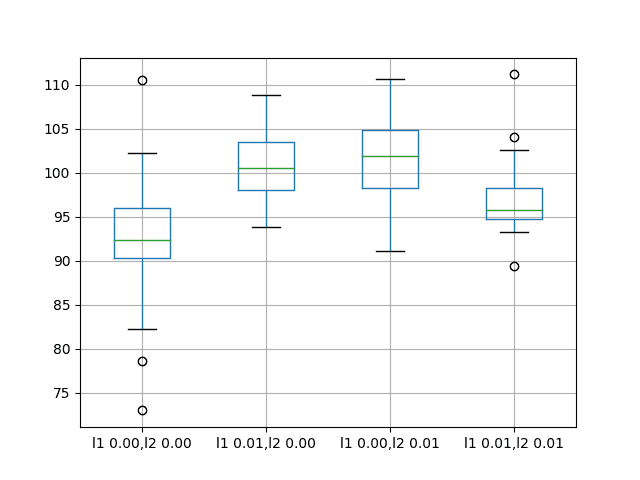

Regularization is any modification we make to a learning algorithm that is intended to reduce its generalization error but not its training error. Deep learning neural networks are likely to quickly overfit a training dataset with few examples. There are multiple types of weight regularization, such as penalties based on the L1 and L2 vector norms, and each requires a hyperparameter that must be configured.
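The key difference between the L1 and L2 penalties shows up in how they move the weights. The sketch below assumes plain gradient descent with learning rate `lr` and applies the L1 term through a soft-thresholding (proximal) step, which is one common way to handle it; all names are hypothetical. Applied to the penalty alone, L2 shrinks every weight by the same factor, while L1 sets small weights exactly to zero, which is why L1 tends to produce sparse models.

```python
def l2_shrink(w, lam, lr):
    # Gradient step on lam * w**2 alone: w <- w - lr * 2 * lam * w.
    # Every weight is scaled by the same factor, so it shrinks but never hits zero.
    return w * (1.0 - 2.0 * lr * lam)

def l1_shrink(w, lam, lr):
    # Proximal (soft-thresholding) step for lam * |w|:
    # weights whose magnitude is at most lr * lam become exactly zero.
    t = lr * lam
    if w > t:
        return w - t
    if w < -t:
        return w + t
    return 0.0

weights = [3.0, 0.05, -0.02, -1.5]
lam, lr = 1.0, 0.1  # lam is the regularization hyperparameter
print([l2_shrink(w, lam, lr) for w in weights])
print([l1_shrink(w, lam, lr) for w in weights])
```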

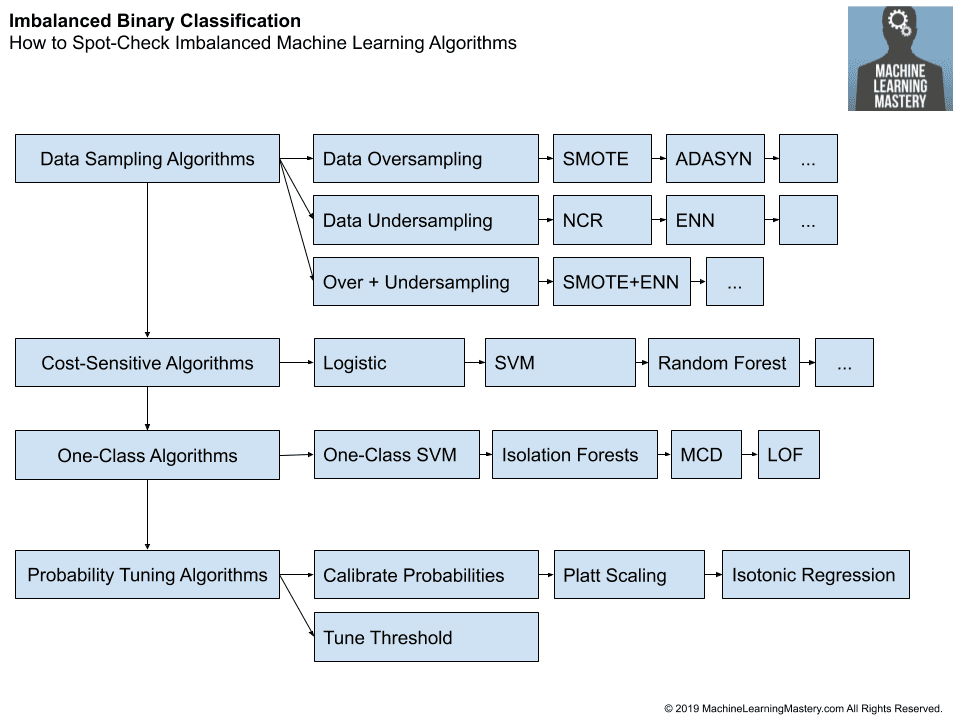

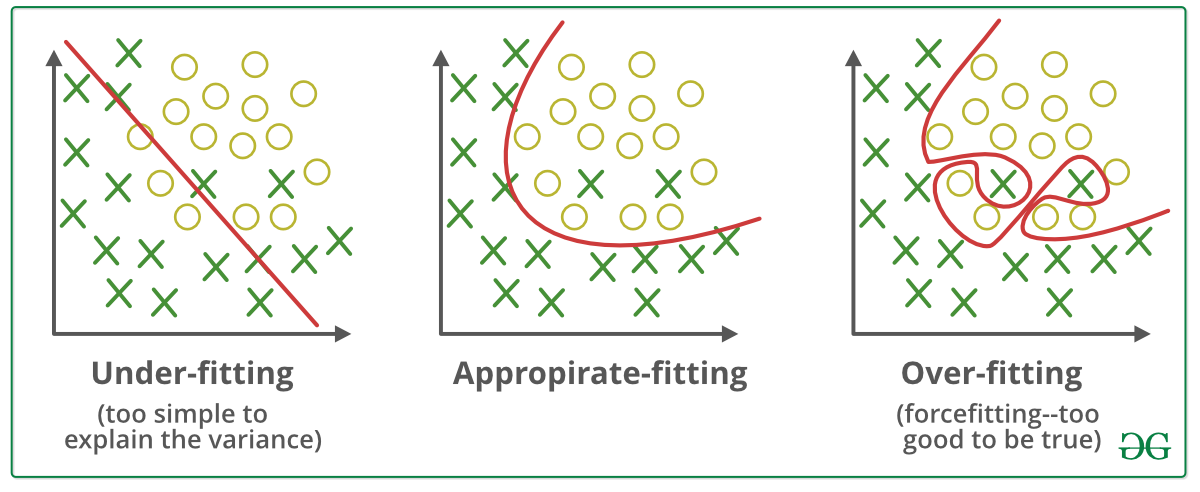

With overfitting, the machine learning model fits the training data too well but fails when applied to the test data. Common regularization techniques include:

- L1 regularization
- L2 regularization
- Dropout regularization

This article focuses on L1 and L2 regularization. The additional penalty term restrains an excessively fluctuating function so that the coefficients don't take extreme values.

To create a less complex (parsimonious) model when you have a large number of features in your dataset, commonly used regularization techniques include L2 and L1 regularization and early stopping.

Regularization is a technique that prevents the model from overfitting by adding extra information to it. More formally, it is a process of introducing additional information in order to solve an ill-posed problem or to prevent overfitting. The intuition behind regularization was explained in the previous post. This example provides a template for applying dropout regularization to your own neural network for classification and regression problems.
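A classic ill-posed case is least squares with perfectly correlated features, where the normal equations have no unique solution. The sketch below assumes a two-feature linear model and uses the ridge (Tikhonov) form of the "additional information": adding `lam` to the diagonal of the system makes it solvable. All names are illustrative.

```python
def solve_2x2(a, b, c, d, e, f):
    """Solve [[a, b], [c, d]] @ [x, y] = [e, f] by Cramer's rule."""
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("singular system: no unique solution")
    return ((e * d - b * f) / det, (a * f - e * c) / det)

def ridge_normal_equations(X, y, lam):
    # Build (X^T X + lam * I) and X^T y for a two-feature design matrix X.
    s11 = sum(r[0] * r[0] for r in X) + lam
    s12 = sum(r[0] * r[1] for r in X)
    s22 = sum(r[1] * r[1] for r in X) + lam
    t1 = sum(r[0] * yi for r, yi in zip(X, y))
    t2 = sum(r[1] * yi for r, yi in zip(X, y))
    return solve_2x2(s11, s12, s12, s22, t1, t2)

# The second feature duplicates the first, so X^T X is singular and the
# unregularized least-squares problem has infinitely many solutions.
X = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
y = [2.0, 4.0, 6.0]

try:
    ridge_normal_equations(X, y, lam=0.0)
except ValueError as err:
    print("lam = 0:", err)
print("lam = 1:", ridge_normal_equations(X, y, lam=1.0))
```

The penalty picks one solution out of the infinitely many, splitting the weight evenly between the duplicated features and shrinking it slightly.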

To understand the concept of regularization and its link with machine learning, we first need to understand why we need regularization. Sometimes a machine learning model performs well on the training data but does not perform well on the test data. Regularization discourages learning a more complex or flexible model, so as to avoid this risk of overfitting.

A modern recommendation for regularization is to use early stopping with dropout and a weight constraint. L1 regularization and L2 regularization are two closely related techniques that machine learning (ML) training algorithms can use to reduce model overfitting. In this article, I'll explain what regularization is from a software developer's point of view.
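A minimal sketch of the two non-dropout ingredients of that recommendation, assuming validation loss is recorded once per epoch; `patience` and the max-norm limit `c` are illustrative hyperparameters, and the function names are hypothetical. In Keras, the same ideas are available as the `EarlyStopping` callback and the `MaxNorm` kernel constraint.

```python
import math

def max_norm(weights, c):
    """Rescale a weight vector so its L2 norm is at most c (a max-norm constraint)."""
    norm = math.sqrt(sum(w * w for w in weights))
    if norm > c:
        return [w * c / norm for w in weights]
    return list(weights)

def early_stopping(val_losses, patience):
    """Return the epoch whose weights to keep: the best validation loss seen
    before `patience` consecutive epochs pass without improvement."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # stop training here
    return best_epoch

print(max_norm([3.0, 4.0], c=1.0))  # norm 5 is rescaled down to norm 1
print(early_stopping([1.0, 0.8, 0.7, 0.72, 0.75, 0.71, 0.9], patience=2))
```

The constraint is applied after each weight update, while early stopping watches the validation curve and keeps the weights from the best epoch.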

In this post, you will discover activation regularization as a technique to improve the generalization of learned features in neural networks. L1, L2, and Elastic Net regularizers are the most widely used in today's machine learning community.
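Elastic Net mixes the L1 and L2 penalties. The sketch below assumes the parameterization scikit-learn documents for `ElasticNet`, with an overall strength (here called `lam`) and a mixing weight `l1_ratio`; the function name is illustrative.

```python
def elastic_net_penalty(weights, lam, l1_ratio):
    """lam * (l1_ratio * sum|w| + (1 - l1_ratio) / 2 * sum w^2).

    l1_ratio = 1 gives a pure L1 (lasso) penalty; l1_ratio = 0 a pure L2 (ridge) one.
    """
    l1 = sum(abs(w) for w in weights)
    l2 = sum(w * w for w in weights)
    return lam * (l1_ratio * l1 + (1.0 - l1_ratio) / 2.0 * l2)

w = [1.0, -2.0]
for ratio in (1.0, 0.5, 0.0):
    print(ratio, elastic_net_penalty(w, lam=1.0, l1_ratio=ratio))
```

Intermediate values of `l1_ratio` keep some of L1's sparsity while retaining L2's tendency to share weight among correlated features.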

Regularizers, or ways to reduce the complexity of your machine learning models, can help you get models that generalize better to new, unseen data. Reducing overfitting leads to a model that makes better predictions.

In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero.

But what are these regularizers? The post Introduction to Regularization to Reduce Overfitting of Deep Learning Neural Networks appeared first on Machine Learning Mastery.

In regression, regularization constrains, or shrinks, the coefficient estimates towards zero. It is one of the most important concepts in machine learning, and it improves the performance of models on new inputs.
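The shrinkage is easy to see in the simplest case. This sketch assumes a one-feature ridge regression with no intercept, minimizing the sum of (y - beta * x)^2 plus lam * beta^2, which has the closed-form solution in the docstring; the data are made up for illustration.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form ridge estimate for y ~ beta * x (no intercept):
    beta = sum(x * y) / (sum(x * x) + lam)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

xs = [1.0, 2.0, 3.0]
ys = [2.1, 3.9, 6.0]
for lam in [0.0, 1.0, 10.0, 100.0]:
    # Larger lam pulls the estimated coefficient further toward zero.
    print(lam, ridge_slope(xs, ys, lam))
```

At `lam = 0` this is ordinary least squares; as `lam` grows, the denominator grows and the estimate moves toward zero without changing sign.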

An overfit model even picks up the noise and fluctuations in the training data and learns them as concepts. You may have other problems that can be diagnosed, or you may even need different regularization methods to address your overfitting. A simple relation for linear regression looks like this.
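Using the standard notation, assumed here, where Y is the response, X_1 to X_p are the features, and β_0 to β_p are the coefficients the learning algorithm estimates from the training data:

```latex
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p
```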

As we all know, machine learning is about training a model on relevant data and using the model to predict unknown data. In my last post, I covered the introduction to regularization in supervised learning models.

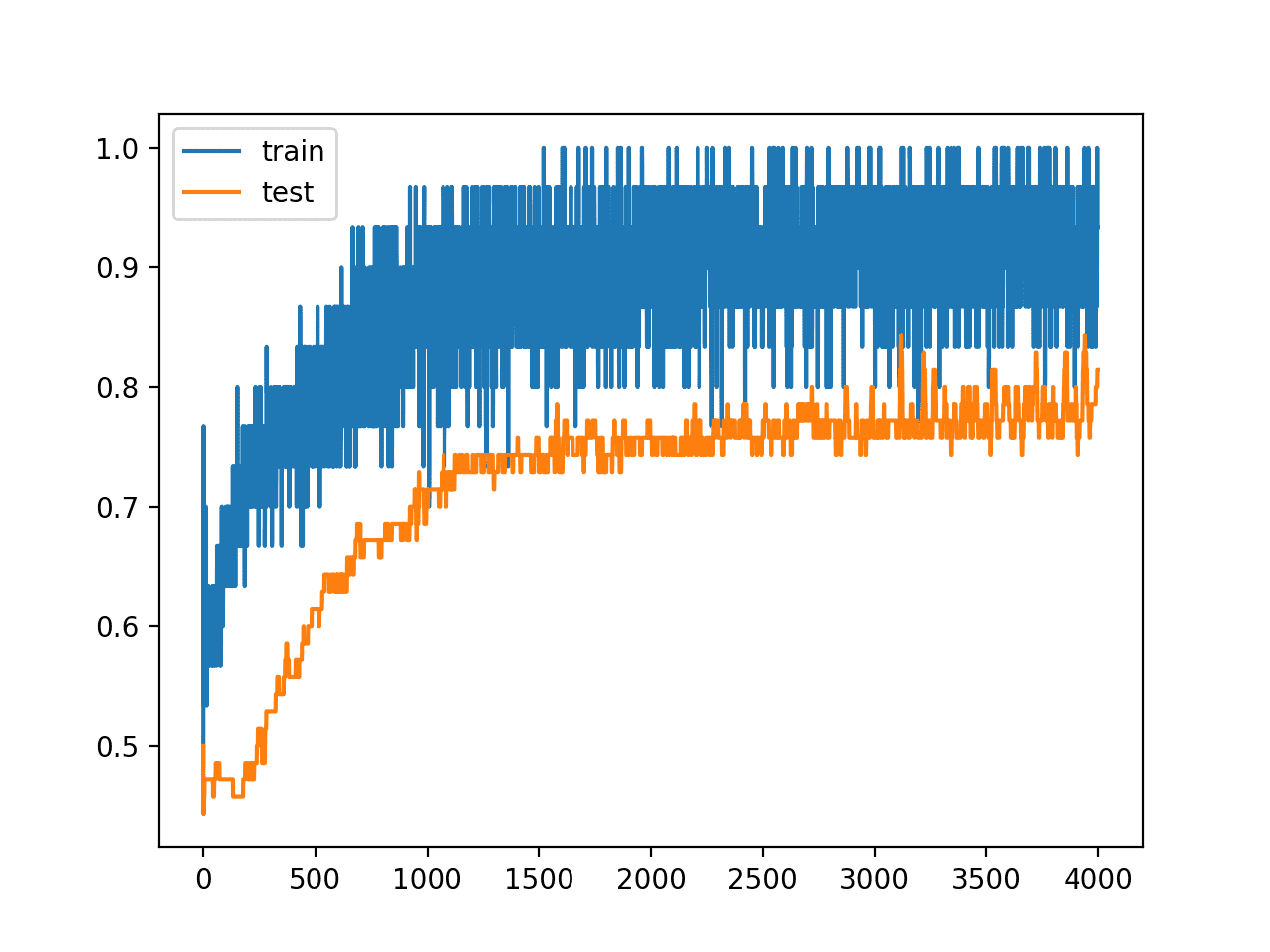

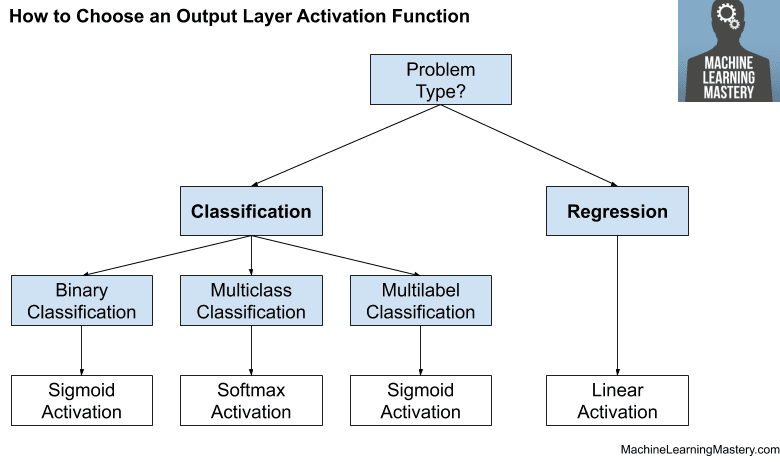

In this section, we will demonstrate how to use dropout regularization to reduce overfitting of an MLP on a simple binary classification problem. Why are regularizers needed in the first place? The cost function for a regularized linear regression is given by the following.
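Assuming the L2 (ridge) form of the penalty, with n training examples, y_i the target for example i, x_{ij} the value of feature j for that example, β_0 the intercept, β_1 to β_p the coefficients, and λ ≥ 0 the tuning hyperparameter, the cost is the residual sum of squares plus a penalty that grows with the size of the coefficients (the intercept is not penalized):

```latex
J(\beta) = \sum_{i=1}^{n} \Big( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Big)^2 + \lambda \sum_{j=1}^{p} \beta_j^2
```

Setting λ = 0 recovers ordinary least squares; replacing β_j^2 with |β_j| gives the L1 (lasso) form.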

Weight regularization provides an approach to reduce the overfitting of a deep learning neural network model on the training data and to improve the performance of the model on new data, such as the holdout test set.
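A small sketch of weight regularization (L2 weight decay) inside gradient descent, using a one-feature linear model as a stand-in for a network layer. The data, learning rate, and epoch count are made up for illustration, and `train` is a hypothetical helper. The penalty adds `2 * lam * w` to the weight gradient, pulling the learned weight toward zero.

```python
def train(xs, ys, lam, lr=0.05, epochs=500):
    """Fit y ~ w * x + b by full-batch gradient descent on
    mean squared error + lam * w**2 (the bias is not penalized)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errs = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Data gradient plus the weight-decay term from the L2 penalty.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errs, xs)) + 2.0 * lam * w
        grad_b = 2.0 / n * sum(errs)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 2.2, 3.9, 6.1]
print("no penalty:", train(xs, ys, lam=0.0))
print("lam = 1.0: ", train(xs, ys, lam=1.0))
```

The regularized run ends with a noticeably smaller weight, trading a slightly worse training fit for a simpler model.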

Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set and avoiding overfitting. With dropout, a single model can be used to simulate having a large number of different network architectures by randomly dropping out nodes during training.
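Each training step samples a different "thinned" network, which is how one model stands in for many. Below is a minimal sketch of inverted dropout on one layer's activations, assuming the common convention that `rate` is the fraction of units dropped (as in the Keras `Dropout(rate)` layer); the function itself is illustrative and expects `0 <= rate < 1`.

```python
import random

def dropout(activations, rate, training, rng=random):
    """Inverted dropout: during training, zero each activation with probability
    `rate` and scale survivors by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the activations pass through untouched."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # seeded so the mask is reproducible
layer_out = [0.2, 1.5, 0.7, 3.1]
print(dropout(layer_out, rate=0.5, training=True, rng=rng))  # each entry zeroed or doubled
print(dropout(layer_out, rate=0.5, training=False))          # unchanged at inference
```

Scaling at training time, rather than at inference, means the deployed network needs no special handling.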
